In this module you will learn the basics of AI. You will understand how AI learns and what some of its applications are.

Learning objectives:

- Define basic AI concepts.

- Explain Machine Learning, Deep Learning and Neural Networks.

- Explain the application areas of AI.

Definition of Machine Learning (ML):

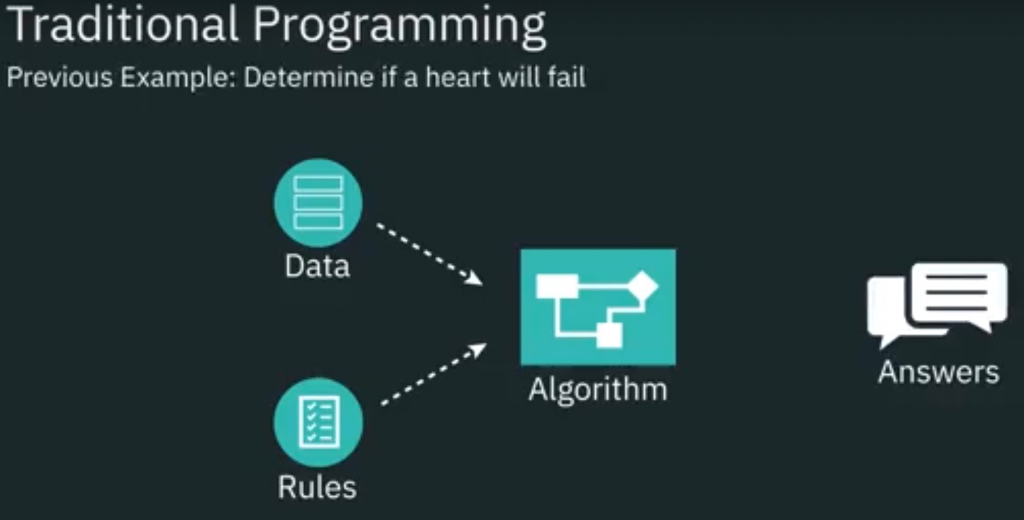

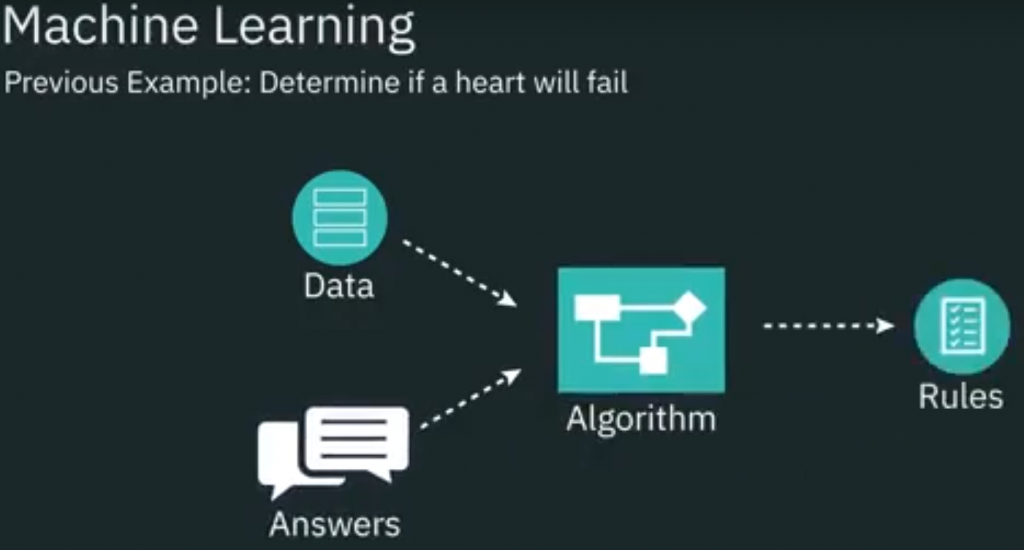

Machine learning uses algorithms to find patterns in data without the programmer having to explicitly program these patterns; the result of this learning is a machine learning model.

Main difference between Machine Learning (ML) and Statistical Analysis:

Main Ideas about Machine Learning (ML):

- We can split ML, this broad concept, into three main categories: Supervised, Unsupervised and Reinforcement Learning.

- Supervised Learning (SL): refers to the case where the dataset contains labels (the known answers) and we use them to build the model, for example a classification model.

- We can subdivide supervised learning (ML-SL) into three categories:

- Regression: regression models are built by looking at the relationship between features “X” and the result “Y”, where “Y” is a continuous variable. Essentially, regression estimates continuous variables.

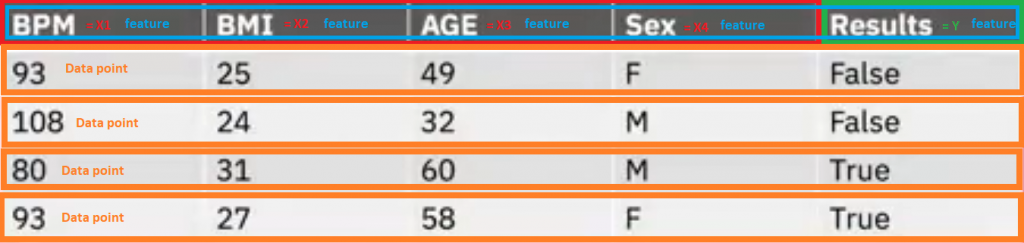
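As a minimal sketch of regression, the snippet below fits a straight line to made-up data with ordinary least squares, which is one common regression method (the notes do not prescribe a specific one). The function name `fit_line` and the house-size/price data are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(X, Y) / variance(X); the line passes through the means.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical toy data: feature X = house size, continuous result Y = price.
sizes = [50.0, 70.0, 90.0, 110.0, 130.0]
prices = [150.0, 200.0, 260.0, 310.0, 370.0]
slope, intercept = fit_line(sizes, prices)
predicted = slope * 100.0 + intercept  # a continuous estimate for an unseen size
print(f"slope={slope:.2f}, intercept={intercept:.2f}, prediction={predicted:.1f}")
# slope=2.75, intercept=10.50, prediction=285.5
```

The prediction is a continuous number rather than a class, which is what separates regression from classification.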

- Classification: focuses on discrete variables. It assigns discrete class labels “Y ∈ {True, False}” based on many input features “X1, X2, X3, X4” (see picture below). In other words, classification is the process of predicting the class of given data points.

- Features: in classification we extract features from the data (X1, X2, X3, X4). Features “are distinctive properties of the input patterns that help in determining the output categories or classes of output”.

- Training: using a learning algorithm to determine and develop the parameters of your model. When training a supervised classification model (ML-SL-Classification), you should show it as many examples as possible so that it can properly classify new incoming data.

- Examples of classification algorithms:

- Decision trees.

- Support vector machines.

- Logistic regression.

- Random forests.

- Others.

- Neural Networks: structures that imitate the structure of the human brain.

- Unsupervised Learning (UL): refers to the case where we do not have labels and must discover class labels from unstructured data. This ML subset normally uses clustering methods.

- Reinforcement Learning (RL): a different ML subset that uses a reward function to penalize bad actions and reward good ones.

- Datasets we need to use:

- Training subset: the data used to train the algorithm.

- Validation subset: used to validate our results and fine-tune the algorithm’s parameters.

- Test subset: data the model has never seen before, used to evaluate how good the model is. We quantify this with metrics such as:

- Accuracy.

- Precision.

- Recall.
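The three data subsets and the three metrics listed above can be sketched in plain Python. This is an illustration under assumptions: a 60/20/20 split, binary labels where `True` is the positive class, and hand-written predictions standing in for a trained model's output on the test subset. `split_dataset` and `evaluate` are hypothetical helper names, not a library API.

```python
import random

def split_dataset(rows, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle the rows, then cut them into training, validation and test subsets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = int(len(rows) * train_frac)
    n_val = int(len(rows) * val_frac)
    train = rows[:n_train]
    validation = rows[n_train:n_train + n_val]
    test = rows[n_train + n_val:]  # held out until the final evaluation
    return train, validation, test

def evaluate(y_true, y_pred):
    """Accuracy, precision and recall for binary labels (True = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # true positives
    tn = sum(1 for t, p in pairs if not t and not p)  # true negatives
    fp = sum(1 for t, p in pairs if not t and p)      # false positives
    fn = sum(1 for t, p in pairs if t and not p)      # false negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

train, validation, test = split_dataset(range(10))
print(len(train), len(validation), len(test))  # 6 2 2

# Hypothetical true labels for the test subset and a model's predictions for them.
y_true = [True, True, True, True, False, False, False, False]
y_pred = [True, True, False, False, True, False, False, False]
accuracy, precision, recall = evaluate(y_true, y_pred)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f}")
```

Here accuracy counts all correct predictions (5 of 8), precision asks how many predicted positives were right (2 of 3), and recall asks how many actual positives were found (2 of 4).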
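The unsupervised learning item above mentions clustering. k-means is one widely used clustering method (the notes do not name a specific one); the sketch below groups unlabeled 2-D points into `k` clusters. The farthest-point initialization, the data and the function name `kmeans` are assumptions chosen to keep the example small and deterministic.

```python
import math

def kmeans(points, k, iterations=100):
    """Group 2-D points into k clusters without using any labels."""
    # Assumed farthest-point initialization: start from the first point, then
    # repeatedly pick the point farthest from all centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: the assignments will not change any more
        centroids = new_centroids
    return labels, centroids

# Hypothetical unlabeled data: two visibly separate groups of points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The cluster numbers 0 and 1 are discovered, not given: the algorithm only finds that the points fall into two groups, and naming what each group means is left to us.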

Main Ideas about Machine Learning (ML) – Deep Learning:

- Deep Learning: a specialized subset of Machine Learning that uses layered algorithms to create a neural network, an artificial replication of the structure and functionality of the brain.

Main Ideas about Machine Learning (ML) – Neural Networks:

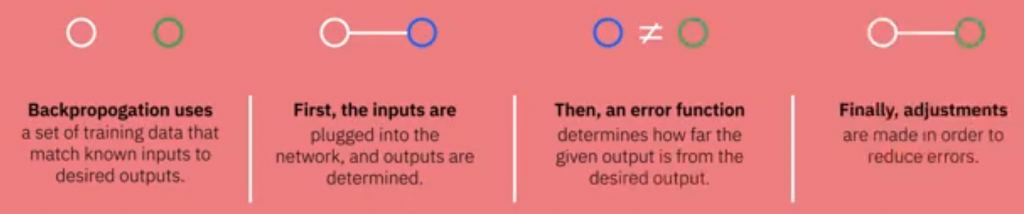

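- Neural Networks (NNs) learn through a process called backpropagation.

- Backpropagation uses a set of training data that matches known inputs to desired outputs. The error between the network's output and the desired output is propagated backward through the layers to adjust the weights.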
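To make backpropagation concrete, here is a minimal sketch of a network with 2 inputs, one hidden layer of 2 neurons and 1 output, trained by backpropagation with full-batch gradient descent. Assumptions: sigmoid activations, a squared-error loss, a learning rate of 0.5, and the logical OR truth table as hypothetical training data; `train_backprop` is a hypothetical name.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_backprop(samples, hidden=2, lr=0.5, epochs=2000, seed=0):
    """Train a 2-input, one-hidden-layer, 1-output network with backpropagation."""
    rng = random.Random(seed)
    w_h = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]  # input -> hidden
    b_h = [0.0] * hidden
    w_o = [rng.uniform(-1, 1) for _ in range(hidden)]                       # hidden -> output
    b_o = 0.0

    def forward(x):
        h = [sigmoid(w_h[j][0] * x[0] + w_h[j][1] * x[1] + b_h[j]) for j in range(hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(w_o, h)) + b_o)
        return h, y

    losses = []
    n = len(samples)
    for _ in range(epochs):
        g_wh = [[0.0, 0.0] for _ in range(hidden)]
        g_bh = [0.0] * hidden
        g_wo = [0.0] * hidden
        g_bo = 0.0
        loss = 0.0
        for x, target in samples:
            h, y = forward(x)
            loss += 0.5 * (y - target) ** 2
            # Output error, scaled by the sigmoid's derivative y * (1 - y).
            delta_o = (y - target) * y * (1 - y)
            g_bo += delta_o
            for j in range(hidden):
                g_wo[j] += delta_o * h[j]
                # Propagate the error backward to hidden neuron j through weight w_o[j].
                delta_h = delta_o * w_o[j] * h[j] * (1 - h[j])
                g_bh[j] += delta_h
                g_wh[j][0] += delta_h * x[0]
                g_wh[j][1] += delta_h * x[1]
        losses.append(loss / n)
        # Gradient descent: move every weight and bias against its averaged gradient.
        for j in range(hidden):
            w_o[j] -= lr * g_wo[j] / n
            b_h[j] -= lr * g_bh[j] / n
            w_h[j][0] -= lr * g_wh[j][0] / n
            w_h[j][1] -= lr * g_wh[j][1] / n
        b_o -= lr * g_bo / n

    def predict(x):
        return forward(x)[1]

    return losses, predict

# Hypothetical training data: the OR truth table (known inputs -> desired outputs).
or_samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
losses, predict = train_backprop(or_samples)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
print([round(predict(x), 2) for x, _ in or_samples])
```

All gradients are computed before any weight changes, so each update uses the error of the same network that produced it.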

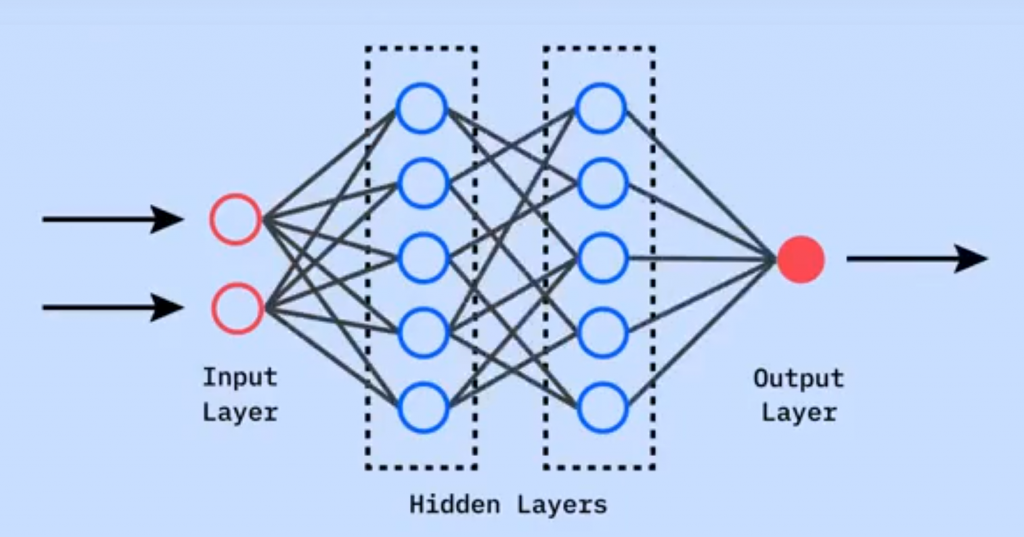

- Layer: a collection of neurons is called a layer. Each layer takes in inputs and provides outputs. Every neural network has an input layer and an output layer, and it can also have one or more hidden layers.

Hidden layers: receive a set of weighted inputs and produce an output through an activation function.

A neural network with more than one hidden layer is referred to as a Deep Neural Network.
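The layer structure described above can be sketched as a forward pass in plain Python. This is an illustration, not a trained model: the 2-3-2-1 layer sizes, the weights and biases, and the choice of ReLU for the hidden layers are all made up. Because it has two hidden layers, it counts as a deep neural network in the sense defined above.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """One layer: every neuron computes a weighted sum of its inputs plus its bias,
    then passes the result through the activation function."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical 2-3-2-1 network: (weights, biases, activation) for each layer.
# One row of weights per neuron, one column per input from the previous layer.
layers = [
    ([[0.5, -2.0], [1.0, 1.0], [-0.5, 2.0]], [0.0, 0.5, 0.5], relu),  # hidden layer 1
    ([[1.0, 0.0, 1.0], [-1.0, 1.0, 0.5]], [0.0, 0.0], relu),         # hidden layer 2
    ([[1.0, -2.0]], [0.25], identity),                                 # output layer
]

def forward(x):
    """Feed the input layer's values through each layer in turn."""
    activations = x
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations

print(forward([2.0, 1.0]))  # [-6.75]
```

For the input `[2.0, 1.0]`, the first hidden neuron's weighted sum is -1.0, so ReLU outputs 0 for it; the first hidden layer produces `[0.0, 3.5, 1.5]` and the second produces `[1.5, 4.25]`.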

Perceptrons: the simplest and oldest type of neural network. They are single-layer neural networks consisting of input nodes connected directly to an output node. The input layer forwards the input values to the next layer by multiplying them by weights and summing the results.

Bias: a property of the hidden and output layers. It is a special type of weight that applies to a node after the other inputs are considered.
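A perceptron with a bias can be trained with the classic perceptron learning rule, sketched below on the logical AND function. Assumptions: a step activation, weights and bias initialized to zero, a learning rate of 1.0, and the AND truth table as hypothetical training data; `train_perceptron` is a hypothetical name.

```python
def step(z):
    """Threshold activation: the perceptron fires (1) only if z is positive."""
    return 1 if z > 0 else 0

def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Perceptron learning rule: nudge weights and bias toward each misclassified target."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            # Weighted sum of the inputs, then the bias is added after them.
            output = step(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = target - output
            if error:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:
            break  # every sample is classified correctly
    return weights, bias

# Hypothetical training data: the logical AND truth table.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
predictions = [step(sum(w * xi for w, xi in zip(weights, x)) + bias) for x, _ in and_samples]
print(weights, bias, predictions)  # [2.0, 1.0] -2.0 [0, 0, 0, 1]
```

The bias is what makes AND learnable here: without it, (1, 0) and (0, 1) would force both weights to be at most zero, while (1, 1) needs their sum to be positive.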

Activation function: determines how a node responds to its inputs. It can take different forms, and choosing the right one is critical to the success of a neural network.
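A few common activation functions, written out in plain Python as an illustrative selection (the notes do not specify which ones to use):

```python
import math

# An illustrative selection of common activation functions.
def step(z):
    """All-or-nothing output, as in the classic perceptron."""
    return 1.0 if z > 0 else 0.0

def sigmoid(z):
    """Squashes any input into the range (0, 1)."""
    # Two algebraically equal forms, chosen by sign so math.exp never overflows.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Squashes any input into (-1, 1), centred on zero."""
    return math.tanh(z)

def relu(z):
    """Passes positive inputs through unchanged and outputs 0 for negative ones."""
    return max(0.0, z)

for name, fn in [("step", step), ("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, [round(fn(z), 3) for z in (-2.0, 0.0, 2.0)])
```

As general practice rather than something these notes prescribe, ReLU is a common default for hidden layers in deep networks, while sigmoid suits an output that should read as a probability.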

- Convolutional Neural Networks (CNNs):